As we enter college essay season, the introduction of artificial intelligence (AI) tools like ChatGPT raises new questions about the best practices for generating admission materials.

- Will our clients use these tools, with or without telling us, to develop essays or short answers?

- Will we (and admissions officers) know if they do? Should we (and will they) care?

- How can we harness the power of these tools to improve our practices?

Personal Statements

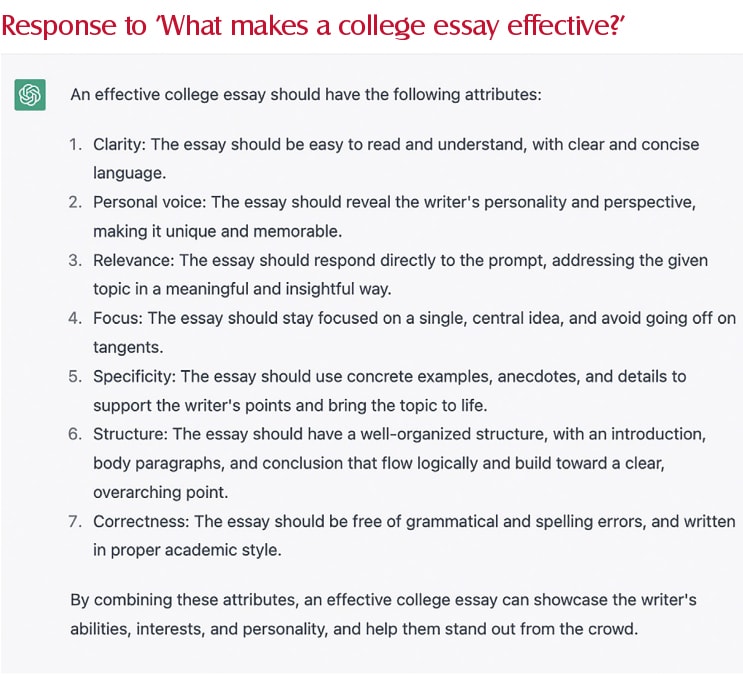

Before addressing the first question, let’s reflect on what makes a college essay effective. Just for fun, I posed this question to ChatGPT since I believe it’s important to get to know, rather than fear or think theoretically about, these tools. See the image at right for its response.

Reading this response confirmed my suspicion that ChatGPT does not possess an adequate recipe for developing what we, as experienced professionals, consider to be strong college essays. AI tools only know what they have learned from past data and are not capable of original thought or insight. While we may agree or disagree with some points in ChatGPT’s list, the second point shows that these tools cannot be used to write effective essays unless our clients do the critical thinking required to define their unique experiences and perspectives.

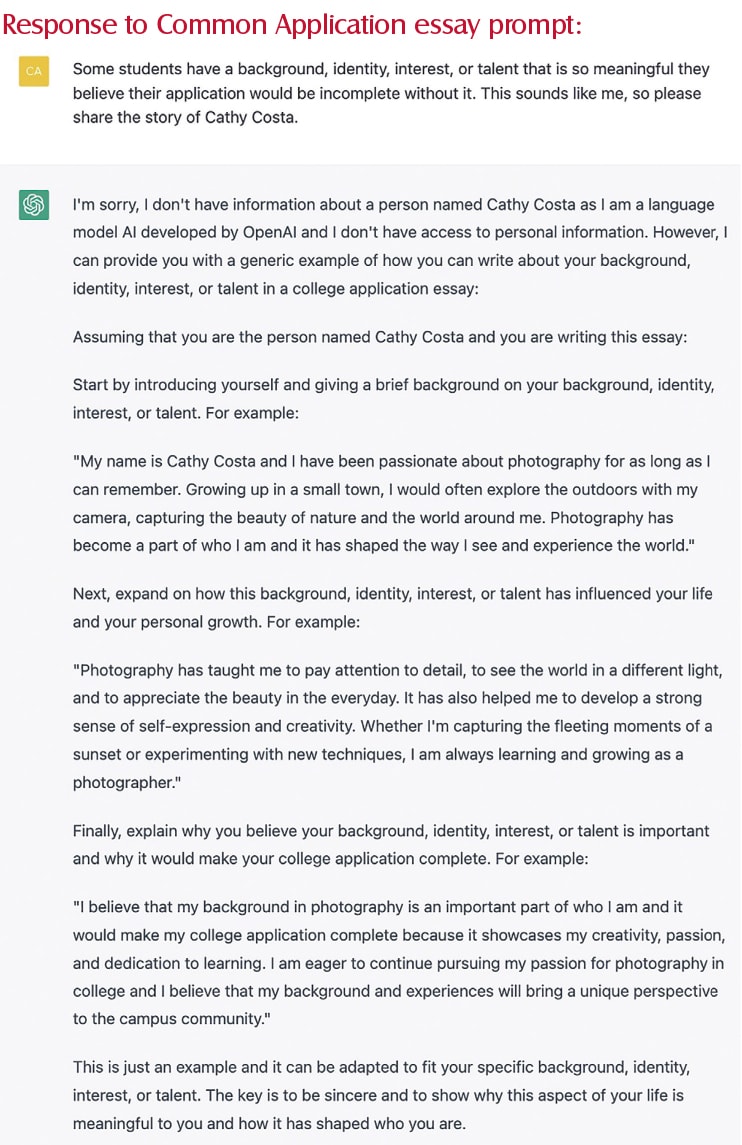

To measure its capacity to capture any individual’s story, I put one of the Common Application essay prompts into ChatGPT. See the second image at right for its response.

This response demonstrates that the current version of ChatGPT generates generic responses and cannot scour the web and capture what is unique or interesting about a particular individual. Thus, to support students in developing strong personal statements, we will still need to help them use various brainstorming methods and exercises to reflect and identify what they want to convey uniquely to colleges through their essays.

• Tools such as questionnaires, values and soundbite exercises, and brainstorming activities are designed to draw out our students’ unique strengths and how they envision contributing to a college community. This is where the critical thinking about a college essay resides, and it cannot be replaced by AI.

• A short writing exercise helps us see how a student writes on a simple topic, and to hear their “voice.” Are they naturally funny, self-deprecating, insightful, great storytellers, unacquainted with punctuation, or focused on tiny details? These exercises help identify what is unique about a student’s voice and encourage them to honor and harness that to their advantage. Sounding like everyone else is not a positive when it comes to college essay writing, and we can help students appreciate this.

• Discussing how students have unique writing styles and voices may discourage them from using AI tools to craft personal statements, but let’s be honest, they will probably try using them. My experimentation has convinced me that this will yield generic results that students will likely discard or need to treat as an initial draft to refine and make their own. This will become the equivalent of basing one’s initial draft on a sample from a book or online; it is a risk that is not new to us, and we should simply continue to encourage students to personalize the generic and make it unique.

• Once any AI-generated essays are customized to reflect a student’s story, strengths, and potential contribution, they will no longer be identifiable by AI-detection software because they will have changed so substantially. Since we do not yet know how admission officers will view AI-generated content, and what (if any) detection methods they may employ, we need to emphasize that it is in our students’ best interest to put in the work and personalize any ideas that they might generate using AI (or other research) tools. If we articulate the benefits and hold students accountable for crafting truly personal statements, we need not fear their use of AI tools and may even come to embrace them for certain purposes.

Supplemental Essays

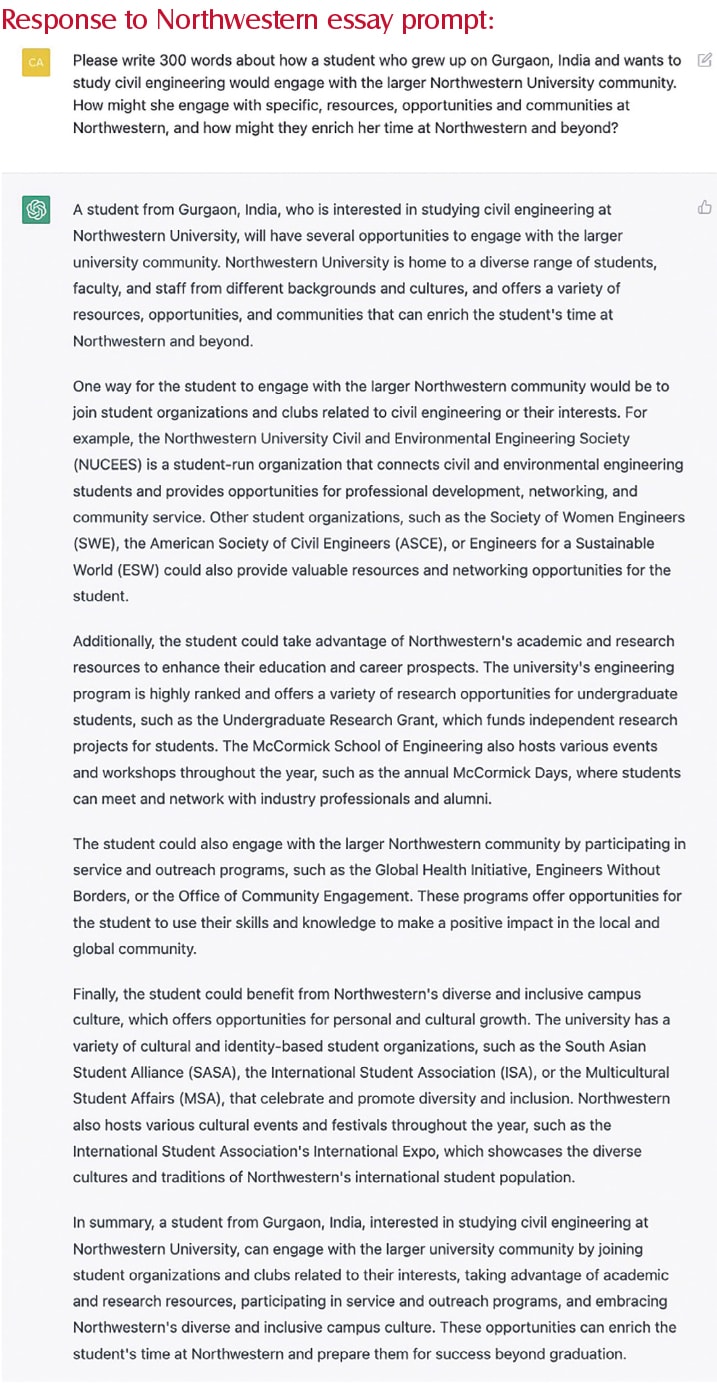

“Why College X?” and other supplemental essays require research, and we might assume that AI tools could be helpful in generating material for first drafts. To test this, I asked ChatGPT to generate a Northwestern essay based on my prior client’s experience. See the result at right.

It generated a formulaic and robotic-sounding essay that did yield quite a few ideas warranting further research. However, I plan to warn my students that they must verify any facts generated by these tools and that admission officers who read many applications daily will come to recognize the essays that AI tools are producing. So again, it is in their best interest to customize any information generated using Google searches, AI chatbots, and other research tools. And despite experimenting by feeding ChatGPT increasingly specific and leading facts about this student, no amount of prompting helped ChatGPT generate an essay with insight and connection between the student and Northwestern’s opportunities.

There is no comparison with the real student’s essay, which opened with “Driving the streets of Gurgaon was a maze with no rules,” followed by her description that in the US, “I was shocked by how organized driving is. Even among the notorious Boston drivers, the lane lines, traffic signals, and sidewalks felt like a utopia.” She described how these experiences sparked her interest in civil engineering and how her diverse high school experiences made her well-suited for Northwestern’s multi-disciplinary approach to learning. She explained that she planned to double major in civil engineering and computer science and minor in manufacturing and design engineering, that she valued the school’s Engineering First Philosophy to solve real-world problems, and that she would participate in research at its Center for Engineering Sustainability and Resilience to gain practical experience in a field she cares deeply about: improving the infrastructure in global communities. This is the quality of thinking that resulted in this student being admitted, and ChatGPT does not generate anything close.

Knowing they may still experiment with these tools, I plan to help students understand some important caveats:

• Funny (and some creepy) media reports have shown how many publicly tested AI tools, including ChatGPT and Bing, have demonstrated a concerning tendency to “hallucinate.” Bing stated its love for a New York Times reporter and insisted he was in an unhappy marriage. Bing’s FAQs state that it “aims to base all its responses on reliable sources—but AI can make mistakes, and third-party content on the internet may not always be accurate or reliable. Bing will sometimes misrepresent the information it finds, and you may see responses that sound convincing but are incomplete, inaccurate, or inappropriate. Use your own judgment and double-check the facts before making decisions or taking action based on Bing’s responses.”

• The way a student asks a question can lead to misleading answers and incorrect interpretations. When asked for a list of top colleges for sports marketing, ChatGPT included the University of Notre Dame. Knowing it does not offer an undergraduate program in sports management or marketing, I did some research to learn that Notre Dame offers a master of science in business analytics with a sports analytics concentration. Obviously, referencing this incorrectly as an undergraduate option in an essay would not benefit a student’s application.

• ChatGPT was “trained” using data up until September 2021. Therefore, any developments since then will not be reflected in its answers. Colleges are constantly changing their offerings and requirements, and students need to be accurate to be credible in their essays, so they need to be aware of the limitations of any AI tools that they use.

These caveats demonstrate that while students may use AI tools to generate ideas for supplemental essays, they will need to carefully fact-check the information generated; thus, they will be forced to do the research we encourage them to do anyway. In addition, students will still need to create their own reflections on how their prior experiences link to college offerings and how they would take advantage of opportunities and contribute to a campus community.

How Can AI Tools Benefit Our Practices?

1. AI tools can and will be used as a starting place to answer more specific supplemental essays, in the same way that many students now use Google searches to jump-start their brainstorming, but they can’t stop there. I gave ChatGPT Villanova’s supplemental essay prompt, “St. Augustine said, ‘Miracles are not contrary to nature but only contrary to what we know about nature,’” and asked it to “tell me about a societal issue that you believe the wonder of technology is well-poised to help solve.” It generated a decent essay about using technology to address the impact of climate change. We must counsel students that copying such responses would not benefit them because this would be plagiarism and would not distinguish them from their peers. Still, such a response could stimulate a student to think critically, research more specifics about this topic, and create links between their personal goals and addressing their chosen issue. Grade: C, but it depends on the question.

2. AI tools can be helpful in smoothing out and reducing word count in drafted documents. I experimented in two ways:

a. Given a supplemental essay draft exceeding the word limit and asked to reduce it to a specific word count and make it sound more professional, ChatGPT did a credible job and trimmed the unnecessary wordiness very well.

b. I gave ChatGPT a student’s overly long and sentimental letter of continued interest and asked it to reduce the word count and make it sound more polished. It did a fine job of reducing the length, and while the essay lost a bit of its personality, that was easily added back by inserting a few key adjectives.

A key difference: unlike the “Why College X?” essay examples above, in both these cases, the document’s content was already generated and fact-checked, and the AI tool was impacting only its presentation. Grade: B.

3. Resume generation: My students create an activities list compatible with the Common Application format using a spreadsheet I provide. I asked ChatGPT to convert one into a resume in the Harvard Business School format, and it generated a header and a list that simply repeated the worksheet’s contents. Grade: F.

In conclusion, while AI tools may provide lists of what to include in a college essay, they cannot generate the critical thinking required to create a compelling and unique personal statement. Students may still use AI tools to draft essays, but we should encourage students to personalize the generic. We should also remind them that AI tools can make mistakes and generate inappropriate or irrelevant responses, and they alone are responsible for the content and tone of their writing. Finally, let’s keep the perspective Tressie McMillan Cottom described so well in a recent article: “That’s the promise of ChatGPT and other artificial approximations of human expression. The history of technology says that these things have a hype cycle: They promise; we fear; they catch hold; they under-deliver. We right-size them. We get back to the business of being human, which is machine-proof.”

Author’s Note: While this article was being written, GPT-4 was released and generated significantly better responses than the original ChatGPT, especially for supplemental essays. This evolution will undoubtedly continue. And because this article exceeded my intended word count, I asked GPT-4 to shorten it. You can view the results and judge for yourselves.

By Cathy Costa, MBA, IECA (MA), Costa Educational Consulting